Let’s break down how subjective randomness allows us to cut out all other influences. Remember that our definition of subjective randomness had 3 parts: 1) sensitivity to conditions (or influences); 2) non-repetition of conditions; and 3) a lack of knowledge of the conditions. In this post, we’ll look at just part 1 of this definition.

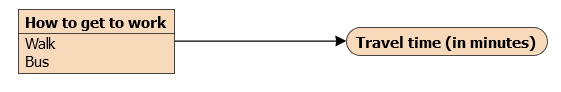

In posts gone by, we explored how we could estimate the causal effect of how we get to work on travel time without pesky confounders messing up our measurements. We came up with a list of possible confounders, things that could affect both our choice of how to get to work and travel time. Including these in our causal model made it look like this:

Basing our choice on a coin toss would cut out the influences on how we get to work, which would also remove the confounding. But wouldn’t some of these influences, like mood and energy, also affect the coin toss anyway? So perhaps the new causal model would be something like this:

All the influences on how we get to work have been cut by the green arrow. The black arrows that remain are pretty straightforward. For example, it’s reasonable to say that the more energy you have, the less time it will take you to walk to work. But those grey arrows are a bit odd. We know mood and energy will affect the coin toss outcome, but we’re a bit fuzzy on how. For example, we can’t just say that the more (or less) energy you have, the more likely you are to flip a head. In fact, it’s not really clear what we can say about the relationship between energy and the coin toss outcome, other than that there is one. Will this create an indirect association between coin toss and travel time that isn’t due to the effect of the coin toss (via how we get to work) on travel time? If so, that would mess up our causal measurements, and we wouldn’t have gained anything by adding the coin toss.

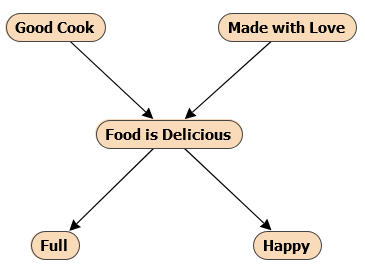

Let’s focus on the indirect relationship between coin toss and travel time to see if it causes a problem. We’ll simplify our model and keep energy as the only common influence on coin toss and travel time, removing everything else.[^1] Since we’re removing how we get to work, we’ll just assume we walk every time. The coin toss therefore now has no direct effect at all on travel time. Let’s also introduce the coin’s flipping speed (the number of times the coin rotates per second while in the air) explicitly, as this will be important later. This gives us:

[^1]: All the simplifications we make will be aimed at strengthening the indirect relationship and removing the direct relationship.

We can reason that, if we have more energy, we’ll take less time to walk to work. Let’s measure energy on a scale from 0 to 10 (say, 0 meaning that you feel like you have the least possible energy, and 10 the most), and for this post we’ll say our energy is completely random from day to day on this scale.[^2] Let’s also assume the relationship between energy and travel time is very simple and deterministic. It might look like this if we graphed it:

[^2]: Being more precise, we’ll say that it’s uniformly random on the continuous range 0-10, inclusive. The specifics of energy (how it’s defined and its distribution) won’t be important.

That tells us it will take 21 minutes to get to work if we rate our energy level as a 0, 18 minutes if we rate our energy level as a 5, and 15 minutes if we rate our energy level as a 10.
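
As a minimal sketch, here is that straight-line relationship in Python. The post itself uses no code. The sketch assumes the line passes exactly through the three points quoted above. The function name `travel_time_minutes` is purely illustrative.

```python
def travel_time_minutes(energy: float) -> float:
    """Deterministic walking time (minutes) for an energy rating in [0, 10].

    Assumed linear, through the points (0, 21), (5, 18) and (10, 15).
    """
    if not 0.0 <= energy <= 10.0:
        raise ValueError("energy must be in the range 0-10")
    return 21.0 - 0.6 * energy  # each extra point of energy saves 0.6 minutes


# Lowest, middle and highest energy ratings.
for e in (0, 5, 10):
    print(e, travel_time_minutes(e))
```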

What about energy’s effect on coin toss? There are two parts to this now: energy’s effect on flipping speed, and then flipping speed’s effect on the coin toss. It’s reasonable to suppose that the more energy you have, the more vigorous the flip and the faster the flipping speed. So let’s make that another simple and deterministic relationship:

So the coin might flip around 30 times per second if our energy is at its lowest, and 50 times per second if it’s at its highest.[^3]

[^3]: Incidentally, the midpoint is very roughly in line with the average flipping speed measured in Persi Diaconis’s paper.
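
The same kind of sketch works for flipping speed. It assumes a straight line through the two endpoints quoted above, and the name `flipping_speed` is hypothetical. Energy is uniform on 0-10 and this mapping is linear, so under these assumptions flipping speed is uniform on 30-50, with a midpoint of 40.

```python
def flipping_speed(energy: float) -> float:
    """Coin rotations per second for an energy rating in [0, 10].

    Assumed linear: 30 rotations/s at energy 0, 50 rotations/s at energy 10.
    """
    if not 0.0 <= energy <= 10.0:
        raise ValueError("energy must be in the range 0-10")
    return 30.0 + 2.0 * energy  # each point of energy adds 2 rotations/s


# The lowest, middle and highest energy ratings map to 30, 40 and 50 rotations/s.
for e in (0, 5, 10):
    print(e, flipping_speed(e))
```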

Great! We’ve now got just one more relationship to specify: how does flipping speed affect the coin toss outcome?

This one’s different: there isn’t a nice increasing or decreasing relationship. In fact, the coin toss outcome bounces back and forth, and depends on how long the coin spends in the air and which side of the coin started up. We’re going to assume it stays in the air for 0.5 seconds, that it starts heads up,[^4] and that there are no other factors that affect the outcome. The coin toss now depends only (deterministically) on flipping speed. Very roughly, the relationship looks like this:

[^4]: Yes, deterministically.

This relationship seems very different to our other two! If the flipping speed is 30 rotations per second, we would get heads. But if we increase it slightly to about 30.5, we’d get tails. Increase it again slightly to 31.5 rotations per second, and we’d go back to heads again. And so on as we keep increasing the flipping speed.
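
The sketch below shows one simple way to reproduce this back-and-forth pattern. It goes beyond what the post specifies by assuming three things:

- the coin spins at a constant rate;
- it doesn't bounce;
- it lands showing whichever face was closer to pointing up when it is caught.

Under those assumptions, heads corresponds to the first and last quarter of each full rotation. The outcome then switches every 1 rotation per second of flipping speed, matching the switch points at 30.5 and 31.5 described above. The names `coin_outcome` and `AIR_TIME_S` are hypothetical.

```python
AIR_TIME_S = 0.5  # time in the air, as assumed in the post


def coin_outcome(flipping_speed: float, air_time: float = AIR_TIME_S) -> str:
    """Return "H" or "T" for a coin that starts heads up.

    Hypothetical model: constant spin, no bounce, and the coin lands showing
    whichever face was closer to pointing up when it was caught.
    """
    rotations = flipping_speed * air_time
    fraction = rotations % 1.0  # how far through the current full rotation
    # Heads is closer to up during the first and last quarter of each rotation.
    return "H" if fraction < 0.25 or fraction >= 0.75 else "T"


for speed in (30.0, 30.7, 31.0, 31.7, 32.0):
    print(speed, coin_outcome(speed))
```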

There is something really interesting about this relationship. The coin toss outcome can’t tell us whether the flipping speed was fast or slow. The outcome certainly tells us something about the flipping speed. Say we get tails: we can rule out the ranges 29.5-30.5, 31.5-32.5, and so on, knocking out a range 1 rotation per second wide out of every 2 rotations per second. In other words, we can rule out about 50% of all the possible flipping speeds. But it doesn’t tell us whether the flipping speed was fast or slow: a tail is still equally likely to have been due to a flipping speed below 40 (slow) as above 40 (fast).
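
Here is a quick numerical check of those two claims. It uses a hypothetical `coin_outcome` model, assumed to spin at a constant rate for 0.5 s and land on whichever face is closer to up. The sketch scans an evenly spaced grid of flipping speeds across 30-50, standing in for the uniform distribution, and keeps the speeds that produce tails.

```python
def coin_outcome(speed: float, air_time: float = 0.5) -> str:
    # Hypothetical model: constant spin, lands on whichever face is closer to up.
    fraction = (speed * air_time) % 1.0
    return "H" if fraction < 0.25 or fraction >= 0.75 else "T"


LOW, HIGH, N = 30.0, 50.0, 200_000
# Midpoints of N equal slices of 30-50, standing in for a uniform flipping speed.
speeds = [LOW + (i + 0.5) * (HIGH - LOW) / N for i in range(N)]
tail_speeds = [s for s in speeds if coin_outcome(s) == "T"]

share_ruled_out = 1 - len(tail_speeds) / N  # speeds that would have given heads
slow = sum(1 for s in tail_speeds if s < 40.0)
fast = len(tail_speeds) - slow

print(f"share of speeds ruled out by a tail: {share_ruled_out:.3f}")
print(f"tails from slow flips (<40): {slow}, from fast flips (>=40): {fast}")
```

A tail rules out half of the grid, and the remaining tail speeds split evenly between slow and fast flips.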

Even more interestingly, the average flipping speed doesn’t change after learning the coin toss outcome. The average flipping speed remains at 40 whether the coin turns up heads or tails. In this case, this also means the average energy level doesn’t change, which in turn means the average travel time doesn’t change.[^5] Ultimately, this means that there is no association between coin toss outcomes and average travel times, even though there is an association between coin toss outcomes and the distribution of travel times.[^6]

[^5]: If the relationship between energy and flipping speed were more complex, it might change. But we won’t dive into those complexities here.

[^6]: In this case, there won’t even be an association with the standard deviation of measured travel times, or other moments of the travel time distribution. All these statements are approximations, but the approximation can be made arbitrarily good by increasing the number of head/tail alternations within the flipping speed range. That could be achieved relatively easily by simply increasing the size of the flipping speed range itself.
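
The same conclusion can be checked by simulation. This sketch makes three assumptions:

- travel time and flipping speed follow straight lines through the points quoted earlier;
- energy is drawn uniformly from 0-10 each simulated day;
- the coin follows a hypothetical model that spins at a constant rate for 0.5 s and lands on whichever face is closer to up.

It then averages travel time separately over heads days and tails days. The seed and number of days are arbitrary choices. Both averages should come out very close to 18 minutes, differing only by sampling noise.

```python
import random
from statistics import fmean


def travel_time(energy: float) -> float:
    return 21.0 - 0.6 * energy  # minutes; assumed straight line


def flipping_speed(energy: float) -> float:
    return 30.0 + 2.0 * energy  # rotations per second; assumed straight line


def coin_outcome(speed: float, air_time: float = 0.5) -> str:
    # Hypothetical model: constant spin, lands on whichever face is closer to up.
    fraction = (speed * air_time) % 1.0
    return "H" if fraction < 0.25 or fraction >= 0.75 else "T"


def simulate(n_days: int = 200_000, seed: int = 1) -> dict[str, list[float]]:
    """Group simulated travel times by the coin toss outcome on each day."""
    rng = random.Random(seed)
    times_by_outcome: dict[str, list[float]] = {"H": [], "T": []}
    for _ in range(n_days):
        energy = rng.uniform(0.0, 10.0)  # energy is uniform on 0-10
        outcome = coin_outcome(flipping_speed(energy))
        times_by_outcome[outcome].append(travel_time(energy))
    return times_by_outcome


results = simulate()
for side, times in results.items():
    print(f"{side}: {len(times)} days, mean travel time {fmean(times):.3f} min")
```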

Being very strict with our language, this means energy is a common cause (and a very strong one), but not a confounder! This seems surprising at first, since energy completely determines both the coin toss outcome and the travel time. If we don’t measure energy, won’t it cause problems for everything else? But the determinism between energy and coin toss only goes one way. In the other direction, the coin toss outcome gives limited new information about flipping speed and energy, and no new information at all about the average flipping speed and energy.

In this sense, it’s not just any kind of sensitivity that contributes to randomness. It’s specifically the kind where every small region of the input (or cause) space maps onto the whole outcome (or effect) space, over and over again. In this case, every small change in flipping speed (say, +1 rotation per second) can produce both possible coin toss outcomes (heads and tails). This kind of sensitivity turns a deterministic relationship in the causal direction into a weak relationship in the other direction. In fact, in the case of the average, there is no relationship in the other direction.
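
The sketch below shows why this particular kind of sensitivity matters. It compares two hypothetical coins:

- a sensitive coin, assumed to spin at a constant rate for 0.5 s and land on whichever face is closer to up;
- an insensitive coin that lands heads whenever the flip is slower than 40 rotations per second, and tails otherwise.

Both coins use the straight-line energy relationships quoted earlier. An evenly spaced grid of energies stands in for the uniform distribution. The helper names are illustrative.

```python
def travel_time(energy: float) -> float:
    return 21.0 - 0.6 * energy  # minutes; assumed straight line


def flipping_speed(energy: float) -> float:
    return 30.0 + 2.0 * energy  # rotations per second; assumed straight line


def sensitive_outcome(speed: float, air_time: float = 0.5) -> str:
    # Hypothetical spinning-coin model: lands on whichever face is closer to up.
    fraction = (speed * air_time) % 1.0
    return "H" if fraction < 0.25 or fraction >= 0.75 else "T"


def threshold_outcome(speed: float) -> str:
    # Hypothetical insensitive coin: heads for every slow flip, tails for every fast one.
    return "H" if speed < 40.0 else "T"


def mean_travel_time_by_outcome(outcome_fn, n: int = 100_000) -> dict[str, float]:
    """Average travel time for each outcome over an even grid of energies in 0-10."""
    totals = {"H": 0.0, "T": 0.0}
    counts = {"H": 0, "T": 0}
    for i in range(n):
        energy = (i + 0.5) * 10.0 / n  # grid midpoints stand in for a uniform energy
        side = outcome_fn(flipping_speed(energy))
        totals[side] += travel_time(energy)
        counts[side] += 1
    return {side: totals[side] / counts[side] for side in totals}


sensitive = mean_travel_time_by_outcome(sensitive_outcome)
threshold = mean_travel_time_by_outcome(threshold_outcome)
print("sensitive coin:", {k: round(v, 2) for k, v in sensitive.items()})
print("threshold coin:", {k: round(v, 2) for k, v in threshold.items()})
```

Under these assumptions, the sensitive coin gives an average travel time of 18 minutes for both heads and tails. The threshold coin splits energy cleanly into low and high: its heads days average 19.5 minutes and its tails days 16.5 minutes. That is exactly the kind of confounding the coin toss was meant to remove.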

We can very much call this a one-way relationship. One-way relationships are especially useful for us because they allow us to cut out other influences (that probably aren’t one-way) without simply introducing a new confounding problem.